-

- Posts: 1

- Joined: Mon Apr 21, 2014 3:34 pm

Linear regression with or without forced origin

Basic questions from students; resources for projects and reports.

17 posts

Page 1 of 2

I'm in the pharmaceutical industry, fairly new to HPLC and GC. I've been taught that in HPLC we do not use zero as one of the data points in a linear-regression calibration for quantitation (assay / impurity content in wt% or area%). I thought the same principle would apply in GC for determination of solvent impurities, but I often see GC methods in which either the zero point is included in the calibration or the regression is forced through the origin. I've never seen that done in HPLC. Why is there a difference? Is it "bad science / statistics" to force through the origin when the GC method calls for an injection of the diluent as the 0% level? Thanks in advance for your insight.

-

- Posts: 3475

- Joined: Mon Jan 07, 2013 8:54 pm

- Location: Western Kentucky

katoblack wrote:

I'm in the pharmaceutical industry, fairly new to HPLC and GC. I've been taught that in HPLC we do not use zero as one of the data points in a linear-regression calibration for quantitation (assay / impurity content in wt% or area%). I thought the same principle would apply in GC for determination of solvent impurities, but I often see GC methods in which either the zero point is included in the calibration or the regression is forced through the origin. I've never seen that done in HPLC. Why is there a difference? Is it "bad science / statistics" to force through the origin when the GC method calls for an injection of the diluent as the 0% level? Thanks in advance for your insight.

I have never used a 0 calibration level on any chromatographic system, GC or LC. When I was working on metals analysis with ICP-OES and ICP-MS, those methods called for a 0 level and I still hated using it. Even in those methods, if you look closely at the shape of the curve near the zero point, it usually just does not fit correctly, and to me it gives bogus results.

I have, though, sometimes wanted to force zero on the linear calibration, because I also hate it when the calibration gives a negative result. How can anyone say it is acceptable to report a concentration of -5 mg/mL, for example, especially when you have a positive area/peak height for that analyte? The official methods, though, tell you not to force zero, and that can still leave you with a negative result depending on the slope and intercept of your line.
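The arithmetic behind such a negative result is simple. A minimal sketch with invented numbers (the slope, intercept and area below are hypothetical): with an unforced fit, any positive response smaller than the intercept back-calculates to a negative concentration.

```python
def back_calculate(area, slope, intercept):
    """Invert the calibration line area = slope * conc + intercept."""
    return (area - intercept) / slope

# Hypothetical unforced calibration with a positive intercept.
slope = 10.0      # area per mg/mL
intercept = 50.0  # area the fitted line predicts at zero concentration

# A real, positive peak area that happens to fall below the intercept.
area = 20.0
conc = back_calculate(area, slope, intercept)
print(f"area {area} -> {conc} mg/mL")
```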

Another thing I have trouble resolving in my mind is when a method strictly forbids blank subtraction but then tells you to include a zero point in your calibration. Isn't that essentially blank subtraction done through the calibration equation instead of plain subtraction of a blank value?

The past is there to guide us into the future, not to dwell in.

-

- Posts: 2071

- Joined: Tue Aug 17, 2004 7:59 pm

- Location: 38.590967, -90.213236

Search and you will find several detailed discussions of approaches to this question.

Thanks,

DR

-

- Posts: 1861

- Joined: Fri Aug 08, 2008 11:54 am

There was a good LC-GC article about it some years ago.

Statistically, the conclusion was that it's OK to force the line through the origin provided that it passes within a statistically-insignificant distance of the origin anyway (in which case, frankly, it's pretty much pointless to do so, because it makes no difference).
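One common way to check that condition is a t-test on the intercept: compare |b| with its standard error. This is a sketch, not necessarily the procedure used in the LC-GC article; the data are hypothetical and the critical value 3.182 (two-sided 95%, 3 degrees of freedom) is taken from a t table.

```python
import math

def fit_with_intercept_se(x, y):
    """Ordinary least squares y = m*x + b; returns m, b and the standard error of b."""
    n = len(x)
    x_mean = sum(x) / n
    y_mean = sum(y) / n
    sxx = sum((xi - x_mean) ** 2 for xi in x)
    sxy = sum((xi - x_mean) * (yi - y_mean) for xi, yi in zip(x, y))
    m = sxy / sxx
    b = y_mean - m * x_mean
    # Residual standard deviation with n - 2 degrees of freedom.
    ss_res = sum((yi - (m * xi + b)) ** 2 for xi, yi in zip(x, y))
    s = math.sqrt(ss_res / (n - 2))
    se_b = s * math.sqrt(1.0 / n + x_mean ** 2 / sxx)
    return m, b, se_b

def intercept_is_significant(x, y, t_crit):
    """True if |b| / se(b) exceeds the two-sided critical t value for n - 2 df."""
    _, b, se_b = fit_with_intercept_se(x, y)
    return abs(b) / se_b > t_crit

# Hypothetical five-level calibration, plus the same data with a real offset.
x = [1, 2, 3, 4, 5]
y = [10.2, 19.8, 30.1, 40.0, 49.9]
y_offset = [yi + 5.0 for yi in y]

print(intercept_is_significant(x, y, 3.182))
print(intercept_is_significant(x, y_offset, 3.182))
```

Only when the first case applies (intercept indistinguishable from zero) does forcing make little difference, which is the point made above.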

The next option after forcing through the origin is using the origin as a data-point (without any data! Just including 0, 0 in the regression). This is a halfway house with no merits whatsoever. It doesn't deal with the problem of a curve that doesn't pass through the origin, but it contaminates real data with an artificial point, potentially pulling the calibration curve away from the measured points in the region where data were collected.
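A small sketch with invented data makes this concrete. Assuming a hypothetical calibration whose true line is y = 10x + 5 (a real, non-zero intercept), the two-parameter fit reproduces the standards exactly, while appending an artificial (0, 0) point, or forcing through the origin, pulls the line away from the measured standards. Residuals are evaluated only at the real standards.

```python
def ols(x, y):
    """Ordinary least squares for y = m*x + b."""
    n = len(x)
    x_mean, y_mean = sum(x) / n, sum(y) / n
    sxx = sum((xi - x_mean) ** 2 for xi in x)
    sxy = sum((xi - x_mean) * (yi - y_mean) for xi, yi in zip(x, y))
    m = sxy / sxx
    return m, y_mean - m * x_mean

def through_origin(x, y):
    """Least squares for y = m*x with no intercept: m = sum(xy) / sum(x^2)."""
    m = sum(xi * yi for xi, yi in zip(x, y)) / sum(xi * xi for xi in x)
    return m, 0.0

def max_abs_residual(x, y, m, b):
    return max(abs(yi - (m * xi + b)) for xi, yi in zip(x, y))

x = [10, 20, 30, 40, 50]
y = [10 * xi + 5 for xi in x]  # hypothetical standards, true intercept 5

fits = {
    "two-parameter": ols(x, y),
    "with artificial (0, 0)": ols([0] + x, [0] + y),
    "forced through origin": through_origin(x, y),
}
for name, (m, b) in fits.items():
    print(f"{name:24s} m = {m:.4f}  b = {b:.3f}  "
          f"worst residual at a standard = {max_abs_residual(x, y, m, b):.3f}")
```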

The third option is a genuine zero-level standard, but this, I think, is unhelpful (for the same reason as using an artificial 0,0 point: it's not going to get rid of the problem), and flawed for two reasons. Firstly, it's a point that is below the limit of quantification, so you are sacrificing the fit of the line over the region in which you will quantify for the sake of a point outside the region where you'd consider quantifying. Secondly, it's biased. Most integrators, most of the time, produce only positive peaks. The noise in and around a genuine peak can either increase or decrease the area, so it doesn't bias the mean. The noise on a non-existent peak in a zero-level injection can only give rise to a positive peak (because that's what integrators do), so it's biased upwards. But the effect is trivial.
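The upward bias can be illustrated with a quick simulation. This is a simplification under stated assumptions: the baseline noise is modeled as zero-mean Gaussian, and the "integrator" simply reports max(0, noise), which real integrators do not literally do. For Gaussian noise the clipped mean is σ/√(2π), roughly 0.4σ, while the raw noise averages to zero.

```python
import random

random.seed(1)  # reproducible illustration

# Hypothetical baseline noise (arbitrary area units) where a blank injection
# has no real peak: zero-mean Gaussian, standard deviation 1.
noise = [random.gauss(0.0, 1.0) for _ in range(10_000)]

# Simplified integrator that can only report a peak area of zero or more.
reported = [max(0.0, n) for n in noise]

mean_noise = sum(noise) / len(noise)
mean_reported = sum(reported) / len(reported)
print(f"mean of the raw noise:        {mean_noise:+.3f}")
print(f"mean of the reported 'areas': {mean_reported:+.3f}")
```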

The correct thing to do is to establish limits over which you feel able to quantify, and create a calibration curve over that range, and completely ignore what the curve does outside that range (but equally, do not report any result outside that range, except as <min or >max, whatever min and max are).
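That reporting rule is easy to encode. A minimal sketch; the function name, and the choice to bound on the back-calculated concentration of the lowest and highest standards, are assumptions for illustration:

```python
def report(area, slope, intercept, lowest_std, highest_std):
    """Back-calculate a concentration, but only report a number inside the calibrated range."""
    conc = (area - intercept) / slope
    if conc < lowest_std:
        return f"<{lowest_std}"
    if conc > highest_std:
        return f">{highest_std}"
    return f"{conc:.2f}"

# Hypothetical calibration: slope 10, intercept 5, standards from 1 to 50.
for area in (8, 55, 1000):
    print(area, "->", report(area, slope=10, intercept=5, lowest_std=1, highest_std=50))
```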

Having said all that, the reason why people force to the origin (and yes, I do it fairly often too, in an academic environment) is that bosses don't like values like -5 µM. Negative values can't be right, and they do nasty things in graph-plotting programs. The situation where the negative values arise is that someone has samples that are genuine negative controls, or a genuine complete knock-out with a chemical missing altogether. Surprisingly, in some circles, measured nearly-zeroes are treated with greater respect than "< LOD", even if you've quantified the LOD quite carefully (graph-plotting programs can only handle numbers; how do you plot "< LOD"?). So we produce results that are probably wrong, but which are numerically positive and very small. That isn't a real lie or deception, because ultimately they are published as an invisibly small bar on a bar chart, compared with nice big bars (with sensible errors and statistics attached to them), so the overall story is actually presented (reasonably) truthfully.

-

- Posts: 902

- Joined: Thu Apr 18, 2013 2:10 pm

It has never made sense to me to use a constant (the y-intercept) that is actually determined by the average values of x and y in the calibration set. My choice for the concentrations of my standards dictates the y-intercept when I use the model: y = mx + b.
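In ordinary least squares the intercept is b = ȳ − m·x̄, so the fitted line always passes through the centroid (x̄, ȳ) of the standards. A sketch assuming a hypothetical, slightly curved response (c^1.2, not from the thread) shows that two different sets of standards give two different intercepts for the same detector.

```python
def ols(x, y):
    """Ordinary least squares for y = m*x + b; also returns the centroid."""
    n = len(x)
    x_mean = sum(x) / n
    y_mean = sum(y) / n
    sxx = sum((xi - x_mean) ** 2 for xi in x)
    sxy = sum((xi - x_mean) * (yi - y_mean) for xi, yi in zip(x, y))
    m = sxy / sxx
    b = y_mean - m * x_mean  # the intercept is fixed by the means
    return m, b, x_mean, y_mean

def response(c):
    # Hypothetical, slightly curved detector response.
    return c ** 1.2

for levels in ([1, 2, 3, 4, 5], [6, 7, 8, 9, 10]):
    y = [response(c) for c in levels]
    m, b, xm, ym = ols(levels, y)
    print(f"standards {levels}: m = {m:.3f}, b = {b:.3f}, "
          f"line passes through ({xm}, {ym:.3f})")
```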

From a physical standpoint, it makes no sense to me that a point so far from zero concentration should dictate what happens down there. Physically, zero detector response at zero concentration makes sense. That's why I generally force my data to fit through zero intercept. My detection limit is not dictated by the y-intercept. It's dictated by a signal for my analyte that shows a S/N ratio of 3.

If I want to use the 2-parameter fit, I try to come up with a blank so that I can legitimately measure the response at zero concentration, and I add it to the calibration standards.

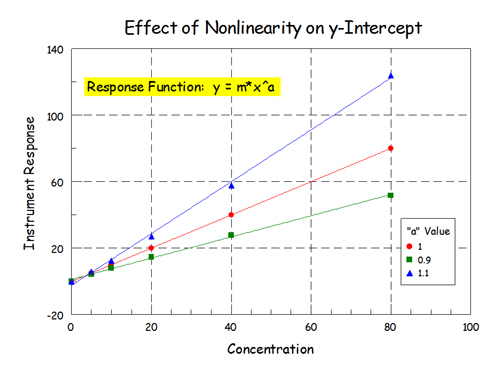

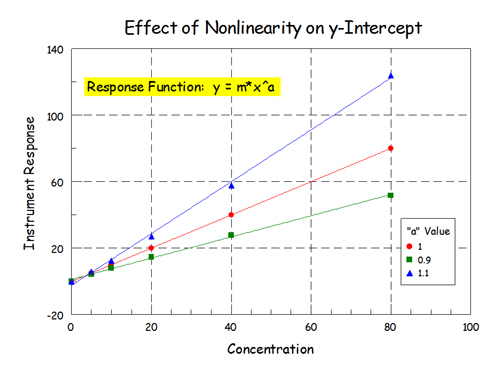

I did this little experiment a while back. I added some nonlinearity to some data by using the function y = m*x^a, where a is a constant, y = instrument response, x = concentration and m = response factor. For a straight line, a = 1. I calculated the y values from the x values (with m = 1) and then fitted them to a straight line (2-parameter, linear least-squares).

This is the full view of the data:

Full View by rb6banjo, on Flickr

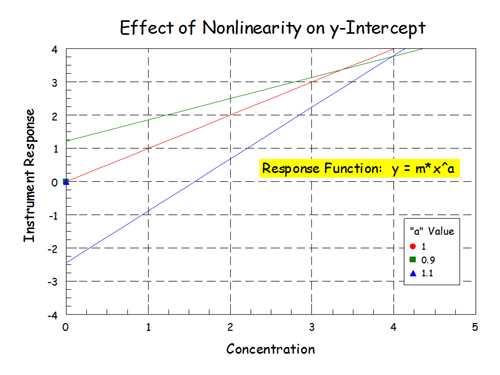

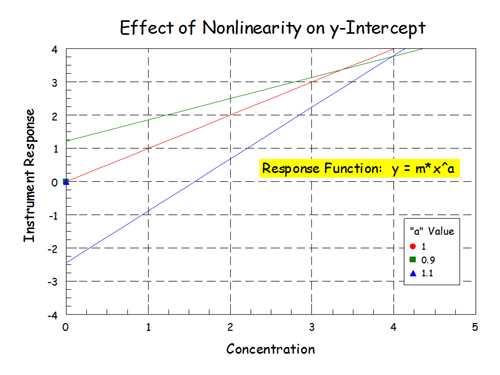

If you expand what's going on at x = 0:

Expanded View by rb6banjo, on Flickr

Add a little nonlinearity to the data and fairly big things happen down at zero. The fit of the linear model to the data was still pretty good, but physically nonsensical things happen down at zero concentration, particularly when the data are nonlinear and slightly concave up.
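The experiment can be reproduced in a few lines. This is a sketch, not the poster's actual calculation: the concentrations 1 to 10 are assumed because the real x values aren't given, and m = 1 as in the post. For a = 1 the intercept is zero; a concave-up response (a > 1) gives a negative intercept and a concave-down one (a < 1) a positive intercept, while r² stays high.

```python
def ols(x, y):
    """Ordinary least squares for y = m*x + b."""
    n = len(x)
    x_mean = sum(x) / n
    y_mean = sum(y) / n
    sxx = sum((xi - x_mean) ** 2 for xi in x)
    sxy = sum((xi - x_mean) * (yi - y_mean) for xi, yi in zip(x, y))
    m = sxy / sxx
    return m, y_mean - m * x_mean

def r_squared(x, y, m, b):
    y_mean = sum(y) / len(y)
    ss_res = sum((yi - (m * xi + b)) ** 2 for xi, yi in zip(x, y))
    ss_tot = sum((yi - y_mean) ** 2 for yi in y)
    return 1.0 - ss_res / ss_tot

x = list(range(1, 11))  # hypothetical concentrations
results = {}
for a in (0.9, 1.0, 1.1):
    y = [1.0 * xi ** a for xi in x]  # y = m * x**a with m = 1
    m, b = ols(x, y)
    results[a] = (m, b, r_squared(x, y, m, b))
    print(f"a = {a}: slope = {m:.4f}, intercept = {b:+.4f}, r^2 = {results[a][2]:.5f}")
```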

The intercept is essentially a fudge factor. If you propagate the error through the functions used to determine the slope and intercept in linear least-squares fitting, you will find that a HUGE proportion of the error is tied up in that intercept. If you have a large nonzero intercept in analytical chemistry, I think there are bigger problems with your calibration data elsewhere. Try to find them. If we are really concerned about what happens down near zero concentration, we should put dilute calibration standards in the mix and see what happens.
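The standard textbook error propagation for a straight-line fit gives se(m) = s/√Sxx and se(b) = s·√(1/n + x̄²/Sxx), where s is the residual standard deviation and Sxx = Σ(x − x̄)². The intercept is an extrapolation from the centroid back to x = 0, so its error grows as the standards sit further from zero relative to their spread. A sketch comparing two hypothetical designs with the same s and the same spread:

```python
import math

def coefficient_standard_errors(x, s):
    """Standard errors of slope and intercept for a straight-line OLS fit,
    given the standard levels x and a residual standard deviation s."""
    n = len(x)
    x_mean = sum(x) / n
    sxx = sum((xi - x_mean) ** 2 for xi in x)
    se_slope = s / math.sqrt(sxx)
    se_intercept = s * math.sqrt(1.0 / n + x_mean ** 2 / sxx)
    return se_slope, se_intercept

s = 1.0  # hypothetical residual standard deviation, same for both designs
designs = {"near zero": [1, 2, 3, 4, 5], "far from zero": [96, 97, 98, 99, 100]}
for name, levels in designs.items():
    se_m, se_b = coefficient_standard_errors(levels, s)
    print(f"{name:14s} se(slope) = {se_m:.3f}, se(intercept) = {se_b:.2f}")
```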

Just my $0.02.

-

- Posts: 1861

- Joined: Fri Aug 08, 2008 11:54 am

Yes, if you fit a straight line through a curved set of points, it won't fit very well, especially at the ends, and the y-axis intercept will be wrong. Yes, the choice of concentrations you make will influence the y-axis intercept if the curve isn't a straight line.

But that's normal and OK. What you are essentially doing is making a simplified model that part of the overall curve is nearly straight, straight enough for you to use the simplified model rather than fit a curve. The simplified model is only applicable over the narrow range where it's tested to fit adequately. The y-axis intercept must be accepted as non-zero or the line won't fit the points in the range you're using, but no one should be using the model anywhere near x=0, so it doesn't matter that the value there would be a bad fit to any measured data, were you to measure that low.

This is a consequence of making a local straight-line model of a global curve.

Personally, I prefer to fit a curve if the points are on a genuine curve. Summary: whatever approach you adopt to calibration, the line must pass close to all the points, and the quantitation is only as good as the closeness of the line to the points. The calibration curve can only be used between its lowest and highest well-fitting points, and interpolation carries the usual warnings.

-

- Posts: 902

- Joined: Thu Apr 18, 2013 2:10 pm

Regarding not operating near zero concentration: maybe you're right for the QA world of pharmaceuticals. Most of the time, you're probably operating in the middle of the calibrated range, far from zero concentration. But for those of us who are looking for quantitative numbers on flavor-active chemicals, we nearly always operate on the near-zero-concentration end of the calibration curve. It's very important how the instrument behaves down there and how the recorded data is handled.

-

- Posts: 185

- Joined: Fri Jul 20, 2012 9:18 am

But for those of us who are looking for quantitative numbers on flavor-active chemicals, we nearly always operate on the near-zero-concentration end of the calibration curve. It's very important how the instrument behaves down there and how the recorded data is handled.

That's why there is a LOD and a LOQ.

E.g.:

If you have samples in a 0-5 ng/L range, there is no use in making a calibration curve of 0-500 ng/L.

It does make sense to make a 0-10 ng/L curve using enough points, equally spaced over the range.

-

- Posts: 1861

- Joined: Fri Aug 08, 2008 11:54 am

That is also the very reason why I added a paragraph explaining why I, like many other people, do make use of calibration curves forced through the origin. I do it where some samples contain less than the limit of detection, but the person who will interpret the data cannot tolerate negative numbers. I warn them that the method is basically returning a "not detected", or at least "low-and-unquantifiable" result, so the actual number is meaningless.

The numbers we get when looking close to the zero-end are only of any meaning whatsoever when compared to numbers that are genuinely measurable, falling within the range of the calibration curve. If all of the numbers are close to zero and below the calibration curve, then either the calibration curve isn't over the right range, or the compound is basically unmeasurable and the work is doomed to failure.

Yes, it is very important to choose a relevant range for the calibration curve. I agree completely with BMU_VMW.

Yes, if the samples are in the range 0-5 ng/L then a calibration curve up to 10 ng/L is good. If the lowest-concentration sample has a discernible peak, then it is above the limit of detection, and we can include standards at this level in our calibration curve, so that the sample falls within the range of the calibration curve. A calibration curve only needs an integratable peak; it doesn't need a peak whose area is reliably accurate to within X%. We can also find, from the standards or from the calibration curve, the point at which we expect X% accuracy, and give this as our limit of quantification, in which case all measured points below it aren't quantitatively reliable ("X" in X% should be chosen to reflect the precision you need). It doesn't matter what area you work in, or how inconveniently dilute the samples are: samples below the limit of detection might or might not contain the chemical, you cannot tell; and samples below the limit of quantitation probably do, but you cannot say with adequate precision how concentrated they are. Of course "adequate" is subjective, as is the limit of quantitation (despite the concrete definitions we tend to use). If you really need to know the answer to +/- 2%, the limit will be far higher than if +/- 10% or 30% were adequate for the purpose.
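One way to turn "the point at which we expect X% accuracy" into a number is to run replicates at each standard level and take the lowest level from which every higher level meets an RSD target. A sketch under assumptions: the replicate values are invented, and using RSD of replicates as the precision criterion is one choice among several.

```python
import statistics

def rsd_percent(values):
    """Relative standard deviation (%) of replicate results."""
    return 100.0 * statistics.stdev(values) / statistics.mean(values)

def limit_of_quantitation(replicates_by_level, max_rsd_percent):
    """Lowest level from which every higher level meets the RSD target, or None."""
    loq = None
    for level in sorted(replicates_by_level, reverse=True):
        if rsd_percent(replicates_by_level[level]) <= max_rsd_percent:
            loq = level
        else:
            break
    return loq

# Hypothetical back-calculated replicate results (ng/L) at each standard level.
replicates = {
    0.5: [0.3, 0.7, 0.5],
    1: [0.8, 1.2, 1.0],
    2: [1.8, 2.2, 2.0],
    5: [4.8, 5.2, 5.0],
    10: [9.8, 10.2, 10.0],
}
for target in (5, 15, 30):
    print(f"LOQ for {target} % RSD: {limit_of_quantitation(replicates, target)} ng/L")
```

As the post says, the tighter the precision you need, the higher the limit comes out.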

-

- Posts: 3475

- Joined: Mon Jan 07, 2013 8:54 pm

- Location: Western Kentucky

lmh wrote:

That is also the very reason why I added a paragraph explaining why I, like many other people, do make use of calibration curves forced through the origin. I do it where some samples contain less than the limit of detection, but the person who will interpret the data cannot tolerate negative numbers. I warn them that the method is basically returning a "not detected", or at least "low-and-unquantifiable" result, so the actual number is meaningless.

The numbers we get when looking close to the zero-end are only of any meaning whatsoever when compared to numbers that are genuinely measurable, falling within the range of the calibration curve. If all of the numbers are close to zero and below the calibration curve, then either the calibration curve isn't over the right range, or the compound is basically unmeasurable and the work is doomed to failure.

Yes, it is very important to choose a relevant range for the calibration curve. I agree completely with BMU_VMW.

Yes, if the samples are in the range 0-5 ng/L then a calibration curve up to 10 ng/L is good. If the lowest-concentration sample has a discernible peak, then it is above the limit of detection, and we can include standards at this level in our calibration curve, so that the sample falls within the range of the calibration curve. A calibration curve only needs an integratable peak; it doesn't need a peak whose area is reliably accurate to within X%. We can also find, from the standards or from the calibration curve, the point at which we expect X% accuracy, and give this as our limit of quantification, in which case all measured points below it aren't quantitatively reliable ("X" in X% should be chosen to reflect the precision you need). It doesn't matter what area you work in, or how inconveniently dilute the samples are: samples below the limit of detection might or might not contain the chemical, you cannot tell; and samples below the limit of quantitation probably do, but you cannot say with adequate precision how concentrated they are. Of course "adequate" is subjective, as is the limit of quantitation (despite the concrete definitions we tend to use). If you really need to know the answer to +/- 2%, the limit will be far higher than if +/- 10% or 30% were adequate for the purpose.

If only we could get auditors and permit writers (and the people at the EPA who approve methods) to understand this!

We have had many auditors who demand we set our Method Detection Limits by the CFR method, where you run seven replicates, multiply the standard deviation by Student's t (3.14, for six degrees of freedom at 99% confidence) and state that as your MDL. Then they ask why we cannot analyze a standard at that concentration and quantify it within the same tolerances as one in the middle of our calibration curve.
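The arithmetic of that MDL procedure is only a few lines. A sketch with hypothetical replicate values, using 3.143 (the one-sided 99% Student's t for six degrees of freedom, rounded to 3.14 above). It also shows why a standard at the MDL can't meet mid-range tolerances: if the standard deviation there is about the same as in the replicates, its expected RSD is s/MDL = 1/t, roughly 32%.

```python
import statistics

# One-sided Student's t at 99 % confidence with 6 degrees of freedom,
# the value used with seven replicates.
T_99_6DF = 3.143

def method_detection_limit(replicates):
    """MDL = t * s for exactly seven replicate spiked samples."""
    if len(replicates) != 7:
        raise ValueError("this sketch only handles the seven-replicate case")
    return T_99_6DF * statistics.stdev(replicates)

# Hypothetical replicate results for a low-level spike (e.g. mg/L).
replicates = [1.9, 2.1, 2.0, 2.2, 1.8, 2.0, 2.0]
s = statistics.stdev(replicates)
mdl = method_detection_limit(replicates)

# Assuming the standard deviation at the MDL matches the replicates' s.
rsd_at_mdl = 100.0 * s / mdl
print(f"s = {s:.4f}, MDL = {mdl:.4f}, RSD of a standard at the MDL = {rsd_at_mdl:.1f} %")
```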

So many times the people evaluating the data believe it should be just like reading a ruler: a definite beginning and end of the measurements, and the same accuracy and precision throughout the entire range.

A good example of why you can't really use a true zero point in a calibration is one I ran into several years ago when asked to help a lab tech with a colorimetric test. He could not figure out why his low calibration check standard was failing. When I looked at his data, the zero point and the first two calibration standards all had exactly the same absorbance reading, and the rest of the standards had absorbance values that increased linearly. Obviously the first two standards were at concentrations below what the instrument could measure, so what they were calling the detection limit was a false number, because it was below the lowest calibration standard. The curve covered a wide enough range that even with the bad points the linear fit still had a correlation of 0.997, which made it look as if it was passing.
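Hypothetical absorbances that mimic the situation described (these are not the lab's actual data): the blank and the first two standards read the same, and the rest are linear. The correlation is still above 0.99, yet all three low points back-calculate to the same non-zero concentration.

```python
import math

def ols(x, y):
    """Ordinary least squares for y = m*x + b."""
    n = len(x)
    x_mean, y_mean = sum(x) / n, sum(y) / n
    sxx = sum((xi - x_mean) ** 2 for xi in x)
    sxy = sum((xi - x_mean) * (yi - y_mean) for xi, yi in zip(x, y))
    m = sxy / sxx
    return m, y_mean - m * x_mean

def pearson_r(x, y):
    n = len(x)
    x_mean, y_mean = sum(x) / n, sum(y) / n
    sxx = sum((xi - x_mean) ** 2 for xi in x)
    syy = sum((yi - y_mean) ** 2 for yi in y)
    sxy = sum((xi - x_mean) * (yi - y_mean) for xi, yi in zip(x, y))
    return sxy / math.sqrt(sxx * syy)

# Hypothetical standards (mg/L) and absorbances: the blank and the first two
# standards read the same because they are below what the method can see.
conc = [0, 1, 2, 5, 10, 20, 50]
absorbance = [0.02, 0.02, 0.02, 0.05, 0.10, 0.20, 0.50]

m, b = ols(conc, absorbance)
r = pearson_r(conc, absorbance)
print(f"r = {r:.4f}")
for c, a in zip(conc[:3], absorbance[:3]):
    print(f"standard {c} mg/L back-calculates to {(a - b) / m:.2f} mg/L")
```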

For chromatography you cannot calculate a concentration that is below what would give an actual peak on the chromatogram, and if that value is above zero, then it would not be logical to place any point in the calibration curve below the lowest concentration that gives a determinable peak. To me, for it to be scientifically valid, you would need to take your calibration curve from the lowest determinable peak up to the highest expected sample concentration or the highest amount the detector can determine reliably (whichever is lower), and only quantify results that fall between those points. The problem comes for us when the end users of the data and the regulators are non-scientists and non-chromatographers and begin to use statistics to try to extend the range of usable data beyond what the instrument can actually determine. Statistically the above-mentioned incident had valid data, but I would never stand in a courtroom and defend any result below the lowest positive calibration response, no matter what the statistics said.

The past is there to guide us into the future, not to dwell in.

-

- Posts: 1861

- Joined: Fri Aug 08, 2008 11:54 am

James_Ball wrote:

... snip ...

For chromatography you cannot calculate a concentration that is below what would give an actual peak on the chromatogram, and if that value is above zero, then it would not be logical to place any point in the calibration curve below the lowest concentration that gives a determinable peak. To me, for it to be scientifically valid, you would need to take your calibration curve from the lowest determinable peak up to the highest expected sample concentration or the highest amount the detector can determine reliably (whichever is lower), and only quantify results that fall between those points. The problem comes for us when the end users of the data and the regulators are non-scientists and non-chromatographers and begin to use statistics to try to extend the range of usable data beyond what the instrument can actually determine. Statistically the above-mentioned incident had valid data, but I would never stand in a courtroom and defend any result below the lowest positive calibration response, no matter what the statistics said.

I couldn't have put it better, and couldn't agree more. I have the luxury of working in an academic science environment where we don't have to obey arbitrary rules. Of course it makes sense for us to be aware of the working-standards used in industry, and deliberately deviate when appropriate, rather than merely fail to work to standards through ignorance!

-

- Posts: 1680

- Joined: Sat Aug 23, 2008 12:04 am

- Location: Alpharetta, GA

A couple of thoughts:

First, what does the non-zero intercept tell us? A positive non-zero intercept may tell us that there is a background signal, either from the analyte or from some interfering compound. A negative non-zero intercept may tell us there is an issue such as GC inlet activity that consumes a small portion of compound with every injection. Or the non-zero intercept may be the result of trying to fit data that curve from detector saturation to a linear fit. If the non-zero intercept tells us that there is something wrong with the chemistry (in sample prep, chromatography, or detection), then perhaps we need to think about the chemistry before we address the line. But we need to know whether that information has practical value.
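Two of those causes can be sketched with invented numbers: a constant background signal adds to every response and shows up as a positive intercept, while a constant amount lost per injection (for example to inlet activity) shows up as a negative intercept equal to minus the slope times the amount lost. The response factor, background and loss values are hypothetical.

```python
def ols(x, y):
    """Ordinary least squares for y = m*x + b."""
    n = len(x)
    x_mean, y_mean = sum(x) / n, sum(y) / n
    sxx = sum((xi - x_mean) ** 2 for xi in x)
    sxy = sum((xi - x_mean) * (yi - y_mean) for xi, yi in zip(x, y))
    m = sxy / sxx
    return m, y_mean - m * x_mean

RESPONSE_FACTOR = 100.0  # hypothetical area per unit concentration
BACKGROUND = 30.0        # hypothetical constant extra signal (area units)
LOSS = 0.5               # hypothetical constant amount lost per injection

conc = [1, 2, 5, 10, 20]
with_background = [RESPONSE_FACTOR * c + BACKGROUND for c in conc]
with_loss = [RESPONSE_FACTOR * (c - LOSS) for c in conc]

for name, area in (("background", with_background), ("constant loss", with_loss)):
    m, b = ols(conc, area)
    print(f"{name:14s} slope = {m:.1f}, intercept = {b:+.1f}")
```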

Second thought - a term that comes in from the business side: "Fit for Purpose"

What objectives are you trying to meet, and who is your customer? Our analytical method has to meet two goals: one is to give true and accurate answers; the second is to answer the question asked by the user of the data. And sometimes it deals with truth as defined by others (like auditors); this becomes part of the question.

And even if you are the user of the data, make sure you understand the question you are asking of the analytical method.

-

- Posts: 5329

- Joined: Thu Oct 13, 2005 2:29 pm

- Location: Maun Botswana

And to add to Don's list of what a non-zero intercept tells us;

- that there was more or less of the substance in the calibration mixture than we thought. This can be because the calibration matrix already had some substance in it before we added any, or because the substance sticks to the container, or is unstable in some way, or it can be due to errors in e.g. weighing, dispensing and dilution.

As Don says, we need to investigate these causes before we start bending the line to fit.
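If the calibration matrix already contains some analyte, the fitted intercept divided by the slope estimates how much. This is the idea behind the method of standard additions, which is not something proposed in this thread but uses the same arithmetic. A sketch with hypothetical numbers:

```python
def ols(x, y):
    """Ordinary least squares for y = m*x + b."""
    n = len(x)
    x_mean, y_mean = sum(x) / n, sum(y) / n
    sxx = sum((xi - x_mean) ** 2 for xi in x)
    sxy = sum((xi - x_mean) * (yi - y_mean) for xi, yi in zip(x, y))
    m = sxy / sxx
    return m, y_mean - m * x_mean

NATIVE = 2.0            # hypothetical analyte already present in the matrix
RESPONSE_FACTOR = 50.0  # hypothetical response per unit concentration

added = [0.0, 1.0, 2.0, 4.0]  # amounts spiked into the matrix
response = [RESPONSE_FACTOR * (a + NATIVE) for a in added]

m, b = ols(added, response)
estimated_native = b / m
print(f"intercept = {b:.1f}, slope = {m:.1f}, estimated native content = {estimated_native:.2f}")
```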

Peter

Peter Apps

-

- Posts: 1108

- Joined: Wed Dec 22, 2010 10:17 pm

- Location: Chicago

Adding a weighing factor to the residuals is more valid than forcing zero.

When doing a simple linear regression, all residuals have the same weight. As a result, a 1 ppm deviation on the y axis counts just as much at the 1 ppm level as at 1000 ppm. In statistical terms, it assumes homoscedasticity of the errors. More often the overall error is not absolute but relative, say 3% of the value. This is why you see a lot of inaccuracy at the bottom of the calibration range.

Forcing zero often helps because you are locking down the bottom of the calibration curve, but as I said, weighting inversely proportional to amount (1/x), or even to amount squared (1/x²), is usually better and more valid, especially when the calibration spans more than a 10- or 20-fold range.
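A sketch of weighted least squares, which minimizes Σ w·(y − m·x − b)². The data are hypothetical: a true response factor of 2 with a ±3% relative error alternating in sign, over a 1000-fold range. Unweighted, the lowest standard back-calculates to a negative value; with 1/x² weights it comes back within a few percent.

```python
def weighted_fit(x, y, w):
    """Weighted least squares for y = m*x + b, minimising sum(w * residual**2)."""
    sw = sum(w)
    x_w = sum(wi * xi for wi, xi in zip(w, x)) / sw
    y_w = sum(wi * yi for wi, yi in zip(w, y)) / sw
    sxx = sum(wi * (xi - x_w) ** 2 for wi, xi in zip(w, x))
    sxy = sum(wi * (xi - x_w) * (yi - y_w) for wi, xi, yi in zip(w, x, y))
    m = sxy / sxx
    return m, y_w - m * x_w

x = [1, 2, 5, 10, 50, 100, 500, 1000]
# Hypothetical responses: true factor 2 with +/-3 % relative error.
y = [2 * xi * (1 + 0.03 * (-1) ** i) for i, xi in enumerate(x)]

schemes = {
    "unweighted": [1.0] * len(x),
    "1/x": [1 / xi for xi in x],
    "1/x^2": [1 / xi ** 2 for xi in x],
}
for name, w in schemes.items():
    m, b = weighted_fit(x, y, w)
    back = (y[0] - b) / m
    print(f"{name:10s} m = {m:.4f}, b = {b:+.3f}, lowest standard (true 1) -> {back:.3f}")
```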

-

- Posts: 5329

- Joined: Thu Oct 13, 2005 2:29 pm

- Location: Maun Botswana

Something else that really should be taken into account, but is often forgotten, is the error along the x-axis. How accurately can we determine the known content of the standard by weighing and volumetry? This error is almost certainly relatively larger at the bottom end of the calibration, where standard concentrations are lowest.
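A rough way to put numbers on this is to combine independent relative standard uncertainties in quadrature, as for any product or quotient of measured quantities. The uncertainty values below are hypothetical, and lower standards are assumed to need more dilution steps. This only estimates the x uncertainty; it does not fit an errors-in-variables regression.

```python
import math

def combined_relative_uncertainty(components):
    """Combine independent relative standard uncertainties in quadrature."""
    return math.sqrt(sum(u ** 2 for u in components))

# Hypothetical relative standard uncertainties (as fractions).
WEIGHING = 0.001  # stock-standard weighing
PIPETTE = 0.005   # one pipetted aliquot
FLASK = 0.002     # one volumetric flask made up to the mark

uncertainties = []
for steps in range(4):
    # Each dilution step adds one pipette and one flask contribution.
    components = [WEIGHING] + [PIPETTE, FLASK] * steps
    u = combined_relative_uncertainty(components)
    uncertainties.append(u)
    print(f"{steps} dilution step(s): {100 * u:.2f} % relative uncertainty in concentration")
```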

Peter

Peter Apps

Latest Blog Posts from Separation Science

Separation Science offers free learning from the experts covering methods, applications, webinars, eSeminars, videos, tutorials for users of liquid chromatography, gas chromatography, mass spectrometry, sample preparation and related analytical techniques.

Subscribe to our eNewsletter with daily, weekly or monthly updates: Food & Beverage, Environmental, (Bio)Pharmaceutical, Bioclinical, Liquid Chromatography, Gas Chromatography and Mass Spectrometry.

- Follow us on Twitter: @Sep_Science

- Follow us on Linkedin: Separation Science